Multimodal Journaling for Parents of Premature Babies

- Categories: Interaction, Design, UX, Digital Health, Publication

- Year: 2017


Keywords: user research, multimodal interaction, parental engagement, digital health, visualization

Motivation

Based on the findings of the large-scale user study with parents of premature babies, two aspects of the lumila implementation were revisited: the need for seamless interaction with the tool, and the presentation of the babies' developmental data.

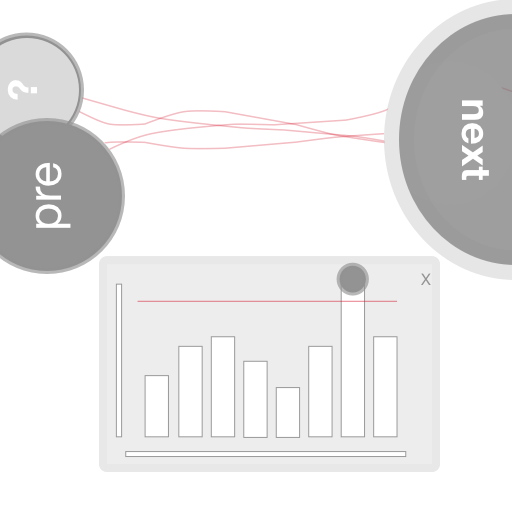

One piece of feedback from the study was that parents do not want to spend their precious time at the bedside staring at and typing into mobile devices; instead, they want to focus on their baby's care. Interaction with any digital support tool must therefore be seamless, offering only as many affordance cues as are necessary, and must not distract the users, i.e. the parents, from their primary purpose for being in the intensive care unit.

Next

The work on lumila continues. The goal is to bring multimodal interface capabilities to the parental engagement platform.

Update (January 2026): the work has been published:

- Designing Multimodal Tools for Parents of Premature Babies (CHI 2016 Workshop)

- Multimodal Journaling as Engagement Tool for Parents of Premature Babies (Informatics for Health and Social Care, Taylor & Francis, 2025)

Implementation

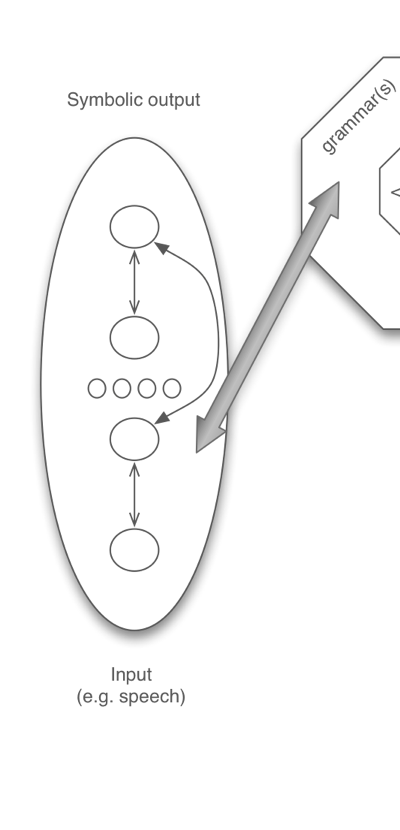

A multimodal user interface based on the gesture and speech modalities was developed and tested with potential users. The collected data was used to train a hybrid (stochastic-heuristic) multimodal integrator that fuses the incoming modality input channels into a single semantic interpretation with approximately 96% accuracy.
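The fusion step can be illustrated with a small late-fusion sketch. This is a minimal sketch under stated assumptions, not the lumila integrator: the `Hypothesis` format, the slot names, the 1.5 s time window and the channel weights are all hypothetical. The stochastic part is approximated by a weighted sum of recognizer confidences (a trained integrator would presumably estimate such parameters from the collected data; here they are fixed by hand), and the heuristic part by two rules: speech and gesture must be close in time, and they must not assign conflicting values to the same slot.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning constants (assumptions, not values from the lumila study).
MAX_GAP_S = 1.5                          # inputs must overlap or be at most this far apart
WEIGHTS = {"speech": 0.6, "gesture": 0.4}
REQUIRED_SLOTS = {"action"}              # a usable interpretation must name an action


@dataclass
class Hypothesis:
    """One recognizer hypothesis from a single input channel (hypothetical format)."""
    modality: str        # "speech" or "gesture"
    slots: dict          # partial semantic frame, e.g. {"action": "log_feeding"}
    confidence: float    # recognizer probability in [0, 1]
    t_start: float       # seconds
    t_end: float


def temporally_compatible(a: Hypothesis, b: Hypothesis) -> bool:
    """Heuristic rule: the inputs overlap (negative gap) or lie at most MAX_GAP_S apart."""
    gap = max(a.t_start, b.t_start) - min(a.t_end, b.t_end)
    return gap <= MAX_GAP_S


def semantically_compatible(a: Hypothesis, b: Hypothesis) -> bool:
    """Heuristic rule: the frames never assign different values to the same slot."""
    shared = a.slots.keys() & b.slots.keys()
    return all(a.slots[k] == b.slots[k] for k in shared)


def fuse(hypotheses: list) -> Optional[dict]:
    """Return the highest-scoring complete semantic frame, or None if there is none."""
    candidates = []  # (score, frame) pairs
    # Unimodal candidates: each channel interpreted on its own.
    for h in hypotheses:
        candidates.append((WEIGHTS[h.modality] * h.confidence, dict(h.slots)))
    # Multimodal candidates: every speech/gesture pair that passes both heuristic rules.
    speech = [h for h in hypotheses if h.modality == "speech"]
    gesture = [h for h in hypotheses if h.modality == "gesture"]
    for s in speech:
        for g in gesture:
            if temporally_compatible(s, g) and semantically_compatible(s, g):
                score = WEIGHTS["speech"] * s.confidence + WEIGHTS["gesture"] * g.confidence
                candidates.append((score, {**s.slots, **g.slots}))
    # Keep only frames that contain every required slot, then pick the best score.
    complete = [(score, frame) for score, frame in candidates
                if REQUIRED_SLOTS.issubset(frame)]
    if not complete:
        return None
    return max(complete, key=lambda c: c[0])[1]


# Usage: "log 20 ml" spoken while pointing at the feeding chart (made-up example).
speech = Hypothesis("speech", {"action": "log_feeding", "amount_ml": 20}, 0.82, 0.0, 1.4)
point = Hypothesis("gesture", {"target": "feeding_chart"}, 0.91, 0.9, 1.2)
print(fuse([speech, point]))
# → {'action': 'log_feeding', 'amount_ml': 20, 'target': 'feeding_chart'}
```

Because the channel weights sum to 1, a compatible speech+gesture pair never ranks below its own components, and a single-channel reading is returned only when it outscores every compatible pair.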

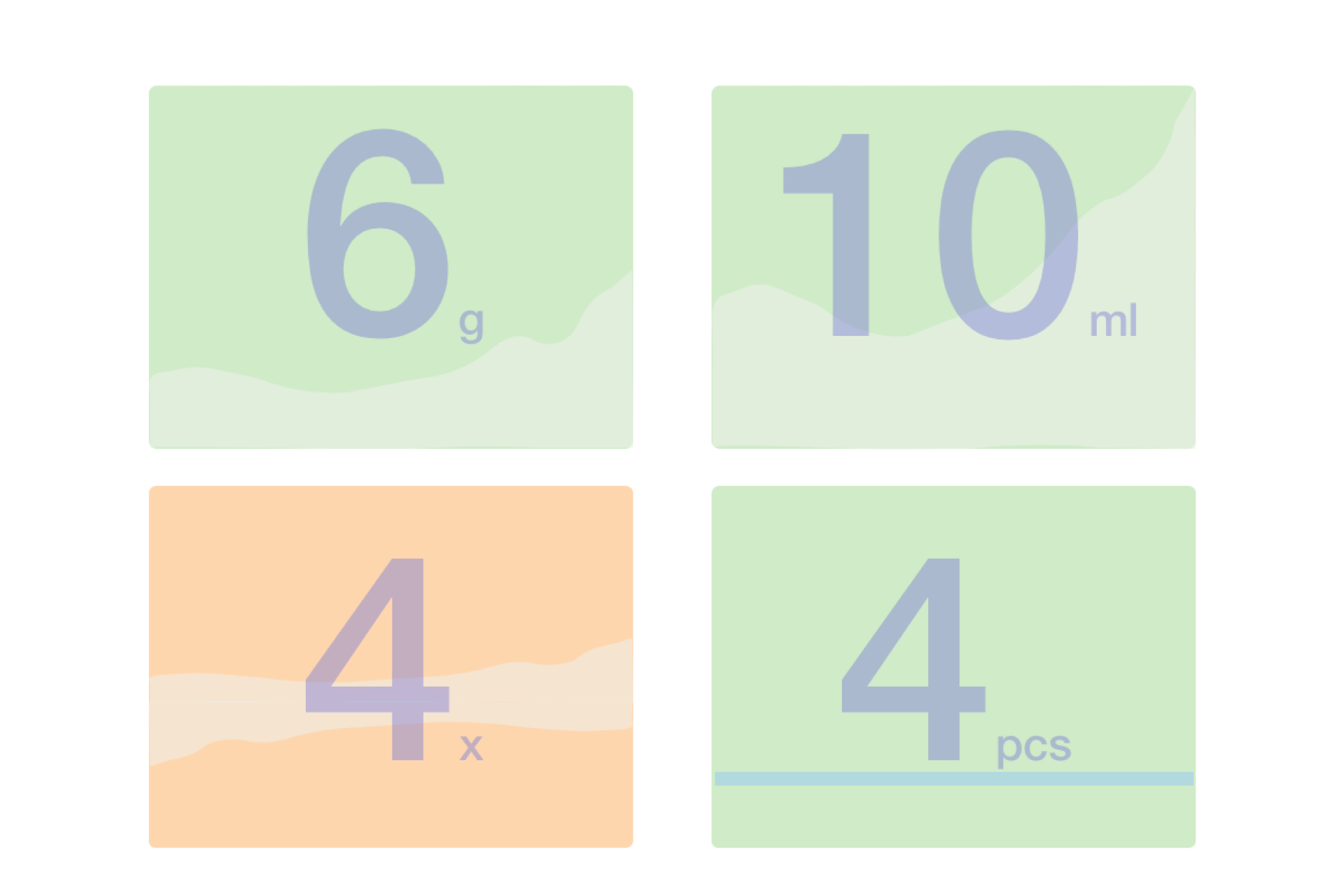

Above: the parents' dashboard showing the babies' developmental data, as used in the prototyping and user-testing phase.

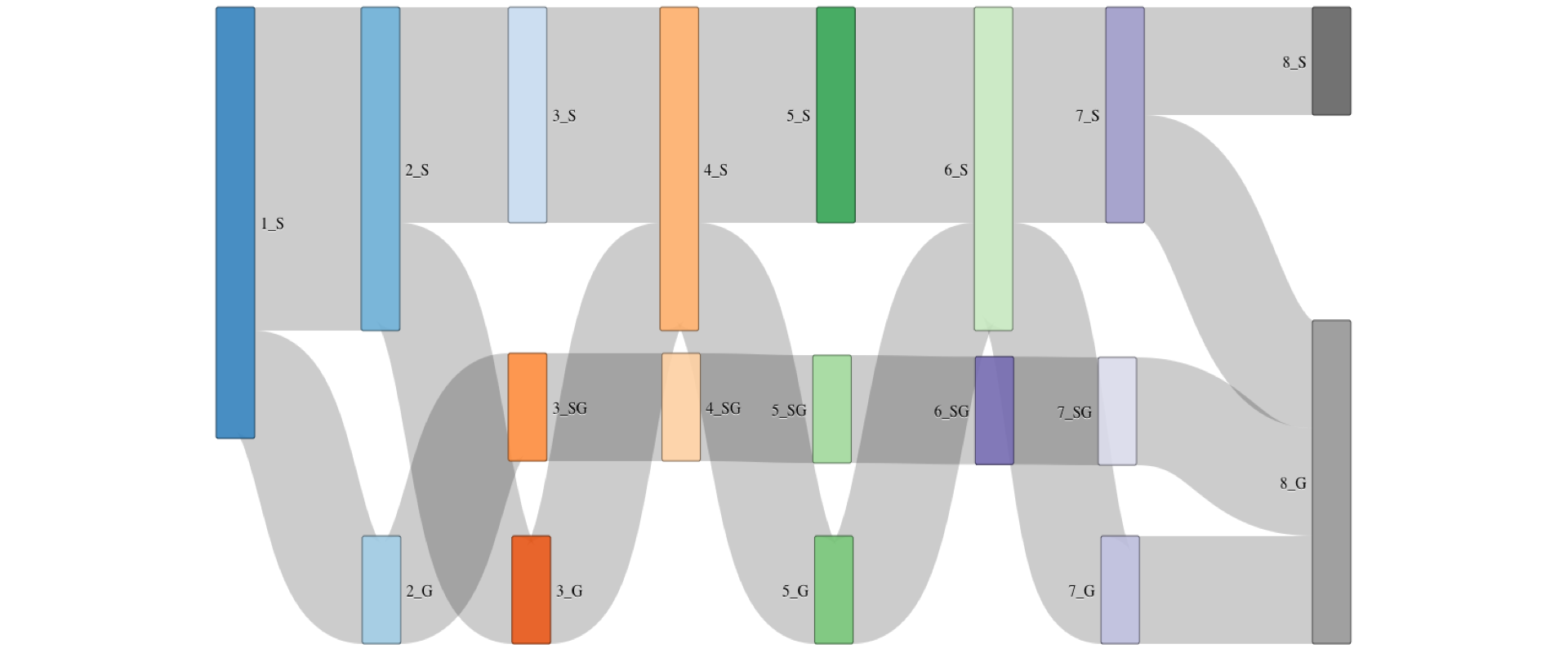

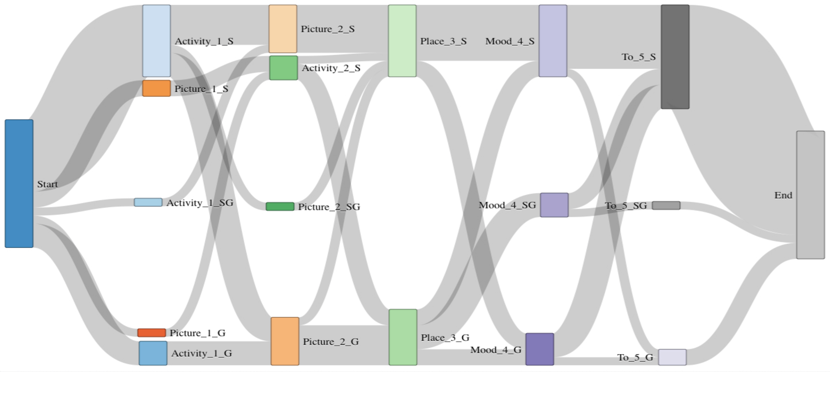

Below: the recorded multimodal interactions, visualized with the same visualization technology as in an earlier project. Users executed a predefined scenario (steps run from left to right) using either speech only (top path), gesture only (bottom path), or multimodal gesture+speech interaction patterns (middle path).
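The three paths can be derived mechanically from a step-by-step interaction log. A minimal sketch, assuming each recorded step is stored as the set of input channels the user employed (a hypothetical log format; the study's actual recording format is not described here). The path labels and the functions `path_of`, `path_counts` and `transitions` are illustrative names, and the two sessions at the bottom are made up.

```python
from collections import Counter

# Hypothetical path labels mirroring the visualization: top, middle and bottom paths.
PATHS = {
    frozenset({"speech"}): "top: speech only",
    frozenset({"speech", "gesture"}): "middle: gesture+speech",
    frozenset({"gesture"}): "bottom: gesture only",
}


def path_of(channels: set) -> str:
    """Map the input channels observed in one scenario step to its path label."""
    return PATHS[frozenset(channels)]


def path_counts(sessions: list) -> list:
    """For each scenario step (left to right), count how many sessions took each path."""
    n_steps = max(len(s) for s in sessions)
    counts = [Counter() for _ in range(n_steps)]
    for session in sessions:
        for step, channels in enumerate(session):
            counts[step][path_of(channels)] += 1
    return counts


def transitions(sessions: list) -> Counter:
    """Count (step, from_path, to_path) moves between consecutive steps."""
    flows = Counter()
    for session in sessions:
        paths = [path_of(c) for c in session]
        for step, (a, b) in enumerate(zip(paths, paths[1:])):
            flows[(step, a, b)] += 1
    return flows


# Two made-up sessions (illustrative only, not study data).
sessions = [
    [{"speech"}, {"speech"}, {"speech", "gesture"}],
    [{"gesture"}, {"speech", "gesture"}, {"speech", "gesture"}],
]
for step, counter in enumerate(path_counts(sessions)):
    print(step, dict(counter))
```

The per-step counts give the width of each path at each step, and the transition counts give the flows a left-to-right path diagram would draw between steps.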